DVDP: An End-to-End Policy for Mobile Robot Visual Docking with RGB-D Perception


Publication Details

DOI: 10.48550/arXiv.2509.13024

Preprint: https://arxiv.org/abs/2509.13024

PDF: DVDP.pdf

Abstract

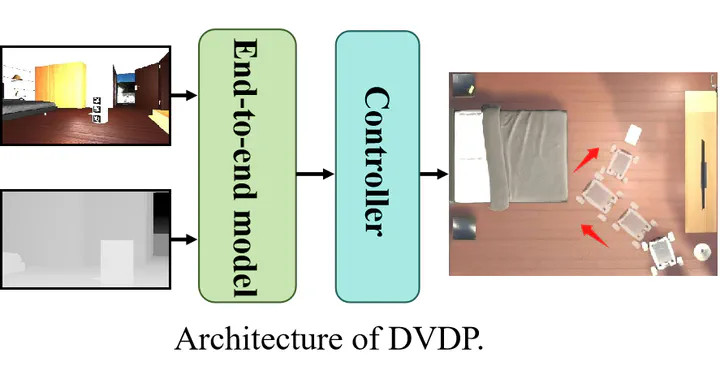

Automatic docking has long been a significant challenge in mobile robotics. Compared with other automatic docking methods, visual docking offers higher precision and lower deployment costs, making it an efficient and promising choice for this task. However, existing visual docking methods impose strict requirements on the robot's initial position at the start of docking. To overcome these limitations, we propose DVDP (direct visual docking policy), an end-to-end visual docking method. DVDP requires only a binocular RGB-D camera mounted on the mobile robot and directly outputs the robot's docking path, achieving end-to-end automatic docking. Furthermore, we collected a large-scale visual docking dataset for mobile robots by combining virtual environments built on the Unity 3D platform with real mobile robot setups. We also developed a set of evaluation metrics to quantify the performance of end-to-end visual docking methods. Extensive experiments, including benchmarks against leading perception backbones adapted into our framework, demonstrate that our method achieves superior performance. Finally, real-world deployment on the SCOUT Mini confirmed DVDP's efficacy: the model generates smooth, feasible docking trajectories that satisfy physical constraints and reach the target pose.

Key Contributions

- End-to-end visual docking policy using only a binocular RGB-D camera

- Large-scale visual docking dataset collected in simulation (Unity 3D) and on real mobile robot platforms

- New evaluation metrics tailored for visual docking performance

- Superior performance compared with leading perception backbones adapted into our framework

- Real-world deployment on the SCOUT Mini, producing smooth, feasible docking trajectories
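This page does not specify DVDP's evaluation metrics. The sketch below is a hypothetical illustration and not the authors' metrics. It shows the kind of check the contributions describe: whether a predicted docking path reaches the target pose and stays within a physical limit. It assumes a path is a list of planar `(x, y, yaw)` waypoints in a common frame. It also uses a maximum-curvature threshold as a stand-in for the robot's physical constraints. The names `evaluate_trajectory`, `DockingMetrics`, `menger_curvature`, and `curvature_limit` are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical waypoint format: (x [m], y [m], yaw [rad]) in the dock/target frame.
Pose = Tuple[float, float, float]


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def menger_curvature(p0: Pose, p1: Pose, p2: Pose) -> float:
    """Curvature (1/m) of the circle through three consecutive waypoints."""
    ax, ay = p1[0] - p0[0], p1[1] - p0[1]
    bx, by = p2[0] - p1[0], p2[1] - p1[1]
    cx, cy = p2[0] - p0[0], p2[1] - p0[1]
    cross = ax * by - ay * bx  # twice the signed triangle area
    denom = math.hypot(ax, ay) * math.hypot(bx, by) * math.hypot(cx, cy)
    # Menger curvature = 4 * area / (|a| |b| |c|) = 2 * |cross| / denom
    return 0.0 if denom == 0.0 else 2.0 * abs(cross) / denom


@dataclass
class DockingMetrics:
    position_error: float  # Euclidean distance from final waypoint to target (m)
    heading_error: float   # absolute wrapped yaw difference at the end (rad)
    max_curvature: float   # largest curvature along the path (1/m)
    feasible: bool         # True if max_curvature stays within the assumed limit


def evaluate_trajectory(path: List[Pose], target: Pose,
                        curvature_limit: float) -> DockingMetrics:
    """Hypothetical docking metrics: final pose error plus a curvature check."""
    if not path:
        raise ValueError("path must contain at least one waypoint")
    fx, fy, fyaw = path[-1]
    tx, ty, tyaw = target
    position_error = math.hypot(fx - tx, fy - ty)
    heading_error = abs(wrap_angle(fyaw - tyaw))
    # Curvature is only defined for runs of three consecutive waypoints.
    curvatures = [menger_curvature(path[i], path[i + 1], path[i + 2])
                  for i in range(len(path) - 2)]
    k_max = max(curvatures, default=0.0)
    return DockingMetrics(position_error, heading_error, k_max,
                          k_max <= curvature_limit)


if __name__ == "__main__":
    # A straight 1 m approach along x that ends exactly at the target pose.
    straight = [(0.1 * i, 0.0, 0.0) for i in range(11)]
    print(evaluate_trajectory(straight, target=(1.0, 0.0, 0.0), curvature_limit=1.0))
```

In the usage example, the straight path ends exactly at the target and never turns. As a result, both pose errors and the curvature are zero, and the path is reported as feasible. A curvature bound was chosen because it is a simple, frame-independent proxy for the turning limit of a wheeled base. Other terms, such as smoothness or collision checks, could be added in the same style.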