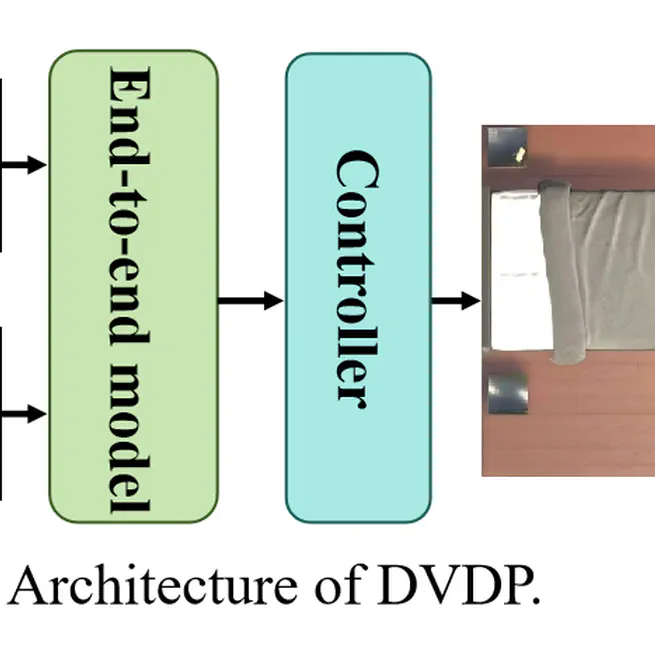

End-to-end visual docking policy (DVDP) using RGB-D for mobile robots; state-of-the-art results and real-world deployment.

Sep 16, 2025

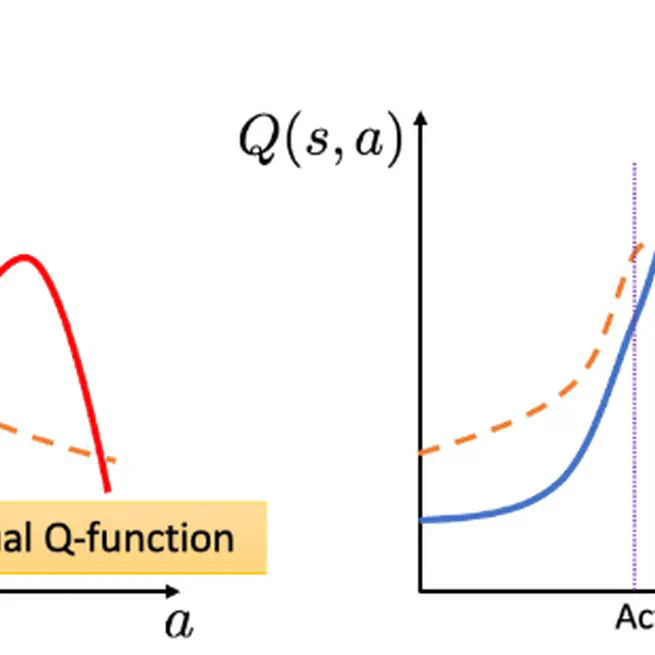

Project Duration: Sep. 2022 to Feb. 2023

Project Details

- Within ROS Noetic, the offline reinforcement learning algorithm CQL (Conservative Q-Learning) is used; it introduces conservative constraints into the Q-value updates.
- Various simulated domestic environments are constructed in Gazebo, and path trajectories are generated with the A* algorithm to collect trajectory data.
- The Rviz tool is used to view and analyze the robot’s trajectory, with manual annotation of the optimal path.
- The annotated data serves as a supervisory signal to train the model, and the trained parameters are saved.

Achievements

- A simulation path training set for the vacuum cleaner in Gazebo has been obtained.
- The conservative coefficient α has been adjusted to optimize the CQL model.
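To illustrate what the conservative constraint and the coefficient α do in a Q-value update, here is a minimal sketch of a per-transition loss in the style of discrete-action CQL. It is not the project's code: the function names `logsumexp` and `cql_loss`, the discrete action set, and the default values of `alpha` and `gamma` are all assumptions made for illustration.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def cql_loss(q_values, action, reward, next_q_values,
             alpha=1.0, gamma=0.99, done=False):
    """Illustrative per-transition CQL-style loss for discrete actions.

    q_values:      Q(s, a) for every action a in state s
    action:        index of the action stored in the offline dataset
    next_q_values: Q(s', a') for every action a' in the next state
    alpha:         conservative coefficient (hypothetical default)
    """
    # Standard one-step TD target and squared Bellman error.
    target = reward if done else reward + gamma * max(next_q_values)
    td_error = 0.5 * (q_values[action] - target) ** 2

    # Conservative term: pushes down Q-values over all actions
    # (via log-sum-exp) while pushing up the Q-value of the logged action.
    penalty = logsumexp(q_values) - q_values[action]

    return td_error + alpha * penalty


# With equal Q-values and a zero TD error, only the penalty remains.
loss = cql_loss([0.0, 0.0], action=0, reward=0.0, next_q_values=[0.0, 0.0])
```

Setting `alpha=0.0` reduces this to an ordinary TD loss, while larger values penalize high Q-values on actions absent from the dataset more strongly, which is the trade-off tuned when adjusting α.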

Feb 1, 2023